I’ve been getting complaints that my lemmas have not been so lovely (or little) lately, so let’s do something a bit more down to earth. This is a story I learned from the book Counterexamples in topology by Steen and Seebach [SS].

A topological space $X$ comes equipped with various operations on its power set $\mathcal P(X)$. For instance, there are the maps $S \mapsto S^\circ$ (interior), $S \mapsto \overline{S}$ (closure), and $S \mapsto S^{\mathsf c}$ (complement). These interact with each other in nontrivial ways; for instance $(\overline{S})^{\mathsf c} = (S^{\mathsf c})^\circ$.

Consider the monoid $M$ generated by the symbols $a$ (interior), $b$ (closure), and $c$ (complement), where two words in $a$, $b$, and $c$ are identified if they induce the same action on subsets of an arbitrary topological space $X$.

Lemma. The monoid $M$ has 14 elements, and is the monoid given by generators and relations

![Rendered by QuickLaTeX.com \[M = \left\langle a,b,c\ \left|\ \begin{array}{cc} a^2=a, & (ab)^2=ab, \\ c^2=1, & cac=b.\end{array}\right.\right\rangle.\]](http://lovelylittlelemmas.rjprojects.net/wp-content/ql-cache/quicklatex.com-107e423b90c7869e548d1339c52c74c2_l3.svg)

Proof. The relations $a^2 = a$, $c^2 = 1$, and $cac = b$ are clear, and conjugating the first by $c$ shows that $b^2 = b$ is already implied by these. Note also that $a$ and $b$ are monotone, and $a(S) \subseteq S \subseteq b(S)$ for all $S \subseteq X$. A straightforward induction shows that if $x$ and $y$ are words in $a$ and $b$, then $xay(S) \subseteq xy(S) \subseteq xby(S)$. We conclude that

![Rendered by QuickLaTeX.com \[abab(S) \subseteq abb(S) = ab(S) = aab(S) \subseteq abab(S),\]](http://lovelylittlelemmas.rjprojects.net/wp-content/ql-cache/quicklatex.com-cef37cdb9f5ca64ad8b8a2d5965ff8b7_l3.svg)

so $(ab)^2 = ab$ (this is the well-known fact that $\overline{S}^\circ$ is a regular open set). Conjugating by $c$ also gives the relation $(ba)^2 = ba$ (saying that $\overline{S^\circ}$ is a regular closed set).

Thus, in any reduced word in $M$, no two consecutive letters agree because of the relations $a^2 = a$, $b^2 = b$, and $c^2 = 1$. Moreover, we may assume all occurrences of $c$ are at the start of the word, using $ac = cb$ and $bc = ca$. In particular, there is at most one $c$ in the word, and removing that if necessary gives a word containing only $a$ and $b$. But reduced words in $a$ and $b$ have length at most 3, as the letters have to alternate and no string $abab$ or $baba$ can occur. We conclude that $M$ is covered by the 14 elements

![Rendered by QuickLaTeX.com \[\begin{array}{ccccccc}1, & a, & b, & ab, & ba, & aba, & bab,\\c, & ca, & cb, & cab, & cba, & caba, & cbab. \end{array}\]](http://lovelylittlelemmas.rjprojects.net/wp-content/ql-cache/quicklatex.com-ac64f82945759d486e7f3d9cadc4eb2a_l3.svg)
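The reduction procedure in the proof can be sketched in a few lines of Python. This is only an illustration of the rewriting argument, not part of the proof: the function name `normal_form` and the encoding of words as strings over `"abc"` are my own choices. It pushes every $c$ to the left (flipping the letters it passes, by $ac = cb$ and $bc = ca$), cancels $c^2$, collapses repeated letters, and shortens $abab$ and $baba$.

```python
from itertools import product

# Moving a c leftward past a letter swaps a and b (ac = cb, bc = ca).
FLIP = {"a": "b", "b": "a"}


def normal_form(word: str) -> str:
    """Reduce a word in a, b, c using only the relations from the lemma."""
    letters = []
    parity = 0  # number of c's to the right of the current letter, mod 2
    for ch in reversed(word):
        if ch == "c":
            parity ^= 1
        else:
            letters.append(FLIP[ch] if parity else ch)
    letters.reverse()

    # a^2 = a and b^2 = b: drop repeated consecutive letters.
    reduced = []
    for ch in letters:
        if not reduced or reduced[-1] != ch:
            reduced.append(ch)

    # (ab)^2 = ab and (ba)^2 = ba: an alternating word of length >= 4
    # loses its third and fourth letters.
    while len(reduced) > 3:
        del reduced[2:4]

    # c^2 = 1: only the parity of the number of c's survives.
    return ("c" if parity else "") + "".join(reduced)


# Normal forms of all words of length at most 5.
ELEMENTS = {
    normal_form("".join(w)) for n in range(6) for w in product("abc", repeat=n)
}
print(sorted(ELEMENTS, key=lambda w: (len(w), w)))
```

The set `ELEMENTS` contains exactly the 14 words listed above (with the empty string standing for $1$). This only confirms the upper bound; that the 14 words act differently still needs a topological example.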

To show that all 14 differ, one has to construct, for any $x \neq y$ in the list above, a set $S \subseteq X$ in some topological space $X$ such that $x(S) \neq y(S)$. In fact we will construct a single set $S \subseteq X$ in some topological space $X$ where all 14 sets $x(S)$ differ.

Call the sets  for

for  the noncomplementary sets obtained from

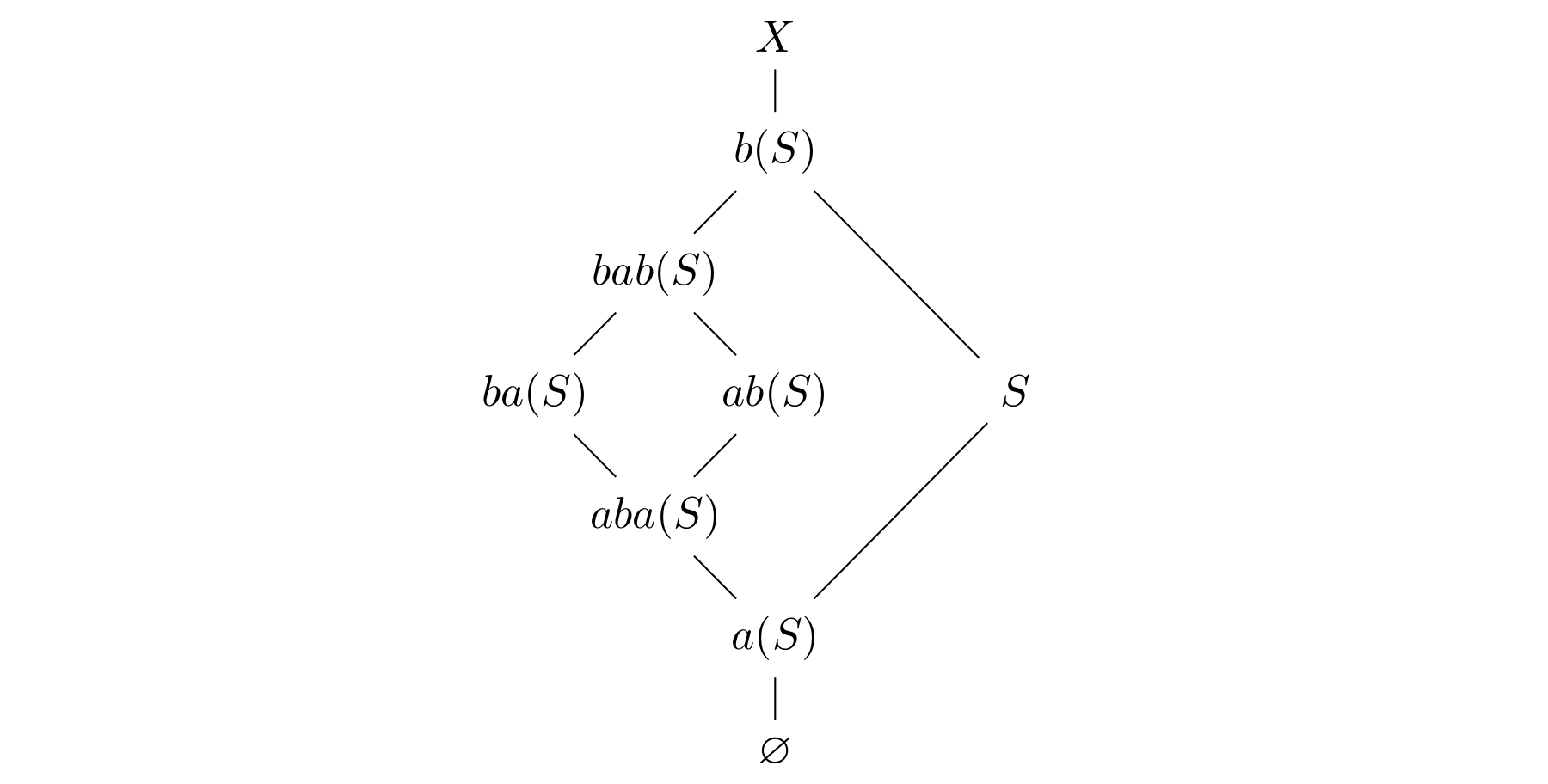

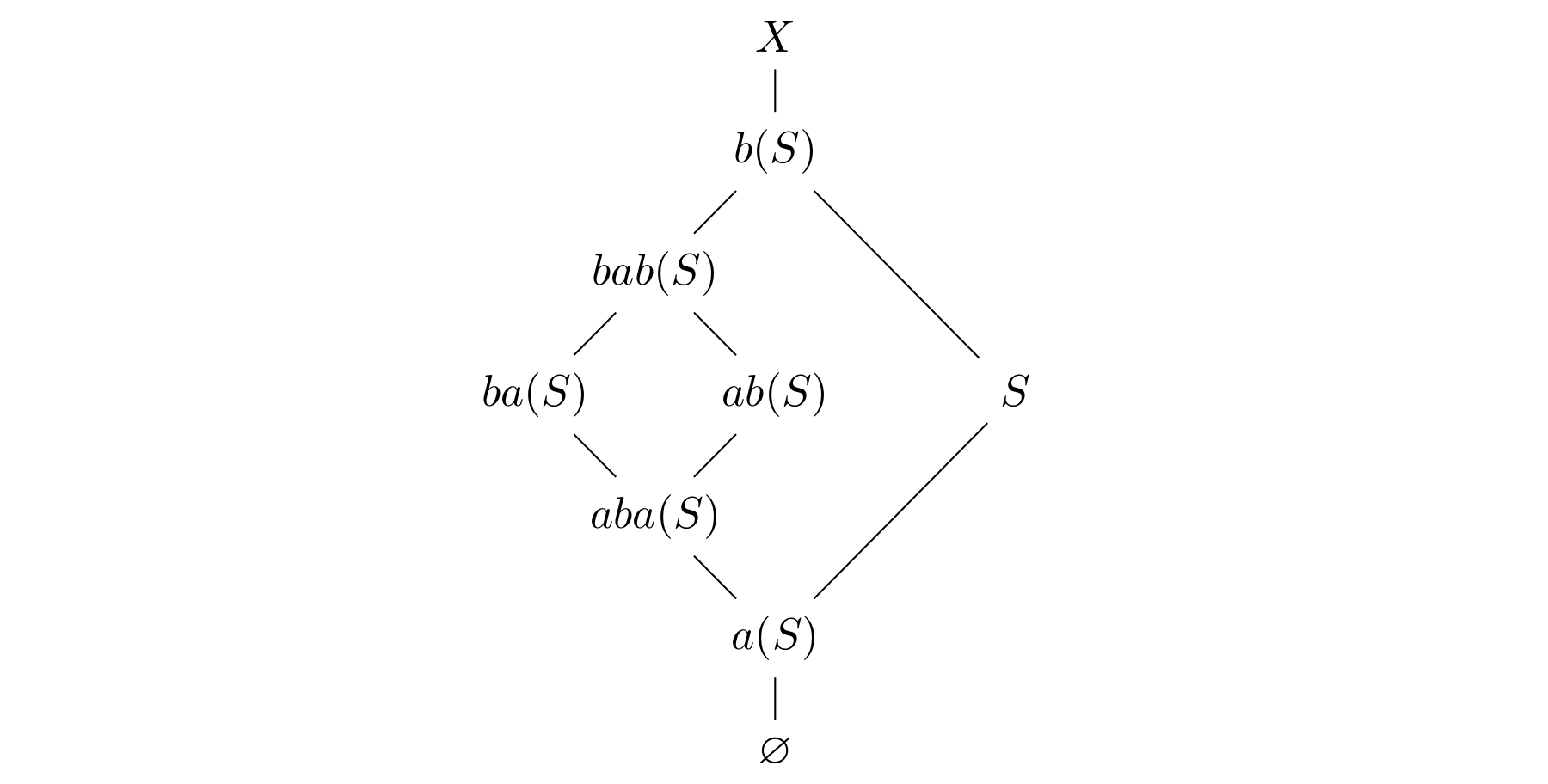

the noncomplementary sets obtained from  , and their complements the complementary sets. By the arguments above, the noncomplementary sets always satisfy the following inclusions:

, and their complements the complementary sets. By the arguments above, the noncomplementary sets always satisfy the following inclusions:
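For reference, the inclusions among the noncomplementary sets can also be written out as chains. This is a plain transcription of what the proof gives, in the word notation $x(S)$ used above; the layout as three chains is my own choice.

```latex
\[
\begin{array}{ccccccccc}
a(S) & \subseteq & aba(S) & \subseteq & ab(S) & \subseteq & bab(S) & \subseteq & b(S), \\
a(S) & \subseteq & aba(S) & \subseteq & ba(S) & \subseteq & bab(S) & \subseteq & b(S), \\
a(S) & \subseteq & S & \subseteq & b(S). & & & &
\end{array}
\]
```

Each inclusion is an instance of $xay(S) \subseteq xy(S) \subseteq xby(S)$ combined with $a^2 = a$ and $b^2 = b$; for example $aba(S) \subseteq ab(S)$ comes from taking $x = ab$ and $y = 1$.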

It suffices to show that the noncomplementary sets are pairwise distinct: this forces $a(S) \neq \varnothing$ (otherwise $ba(S) = \varnothing = a(S)$), so each noncomplementary set contains the nonempty set $a(S)$, whereas each complementary set is disjoint from it. Therefore a noncomplementary set cannot agree with a complementary set.

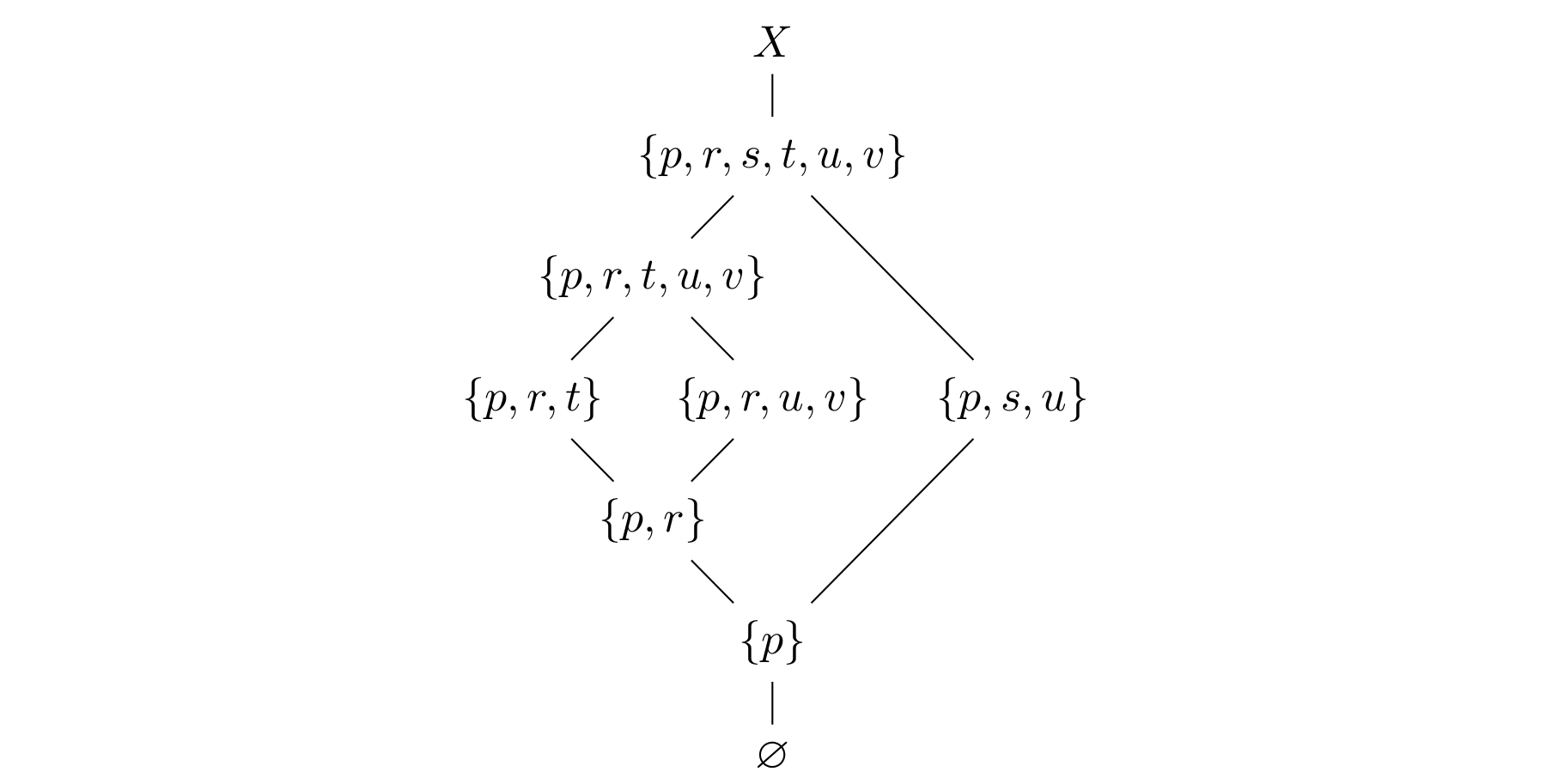

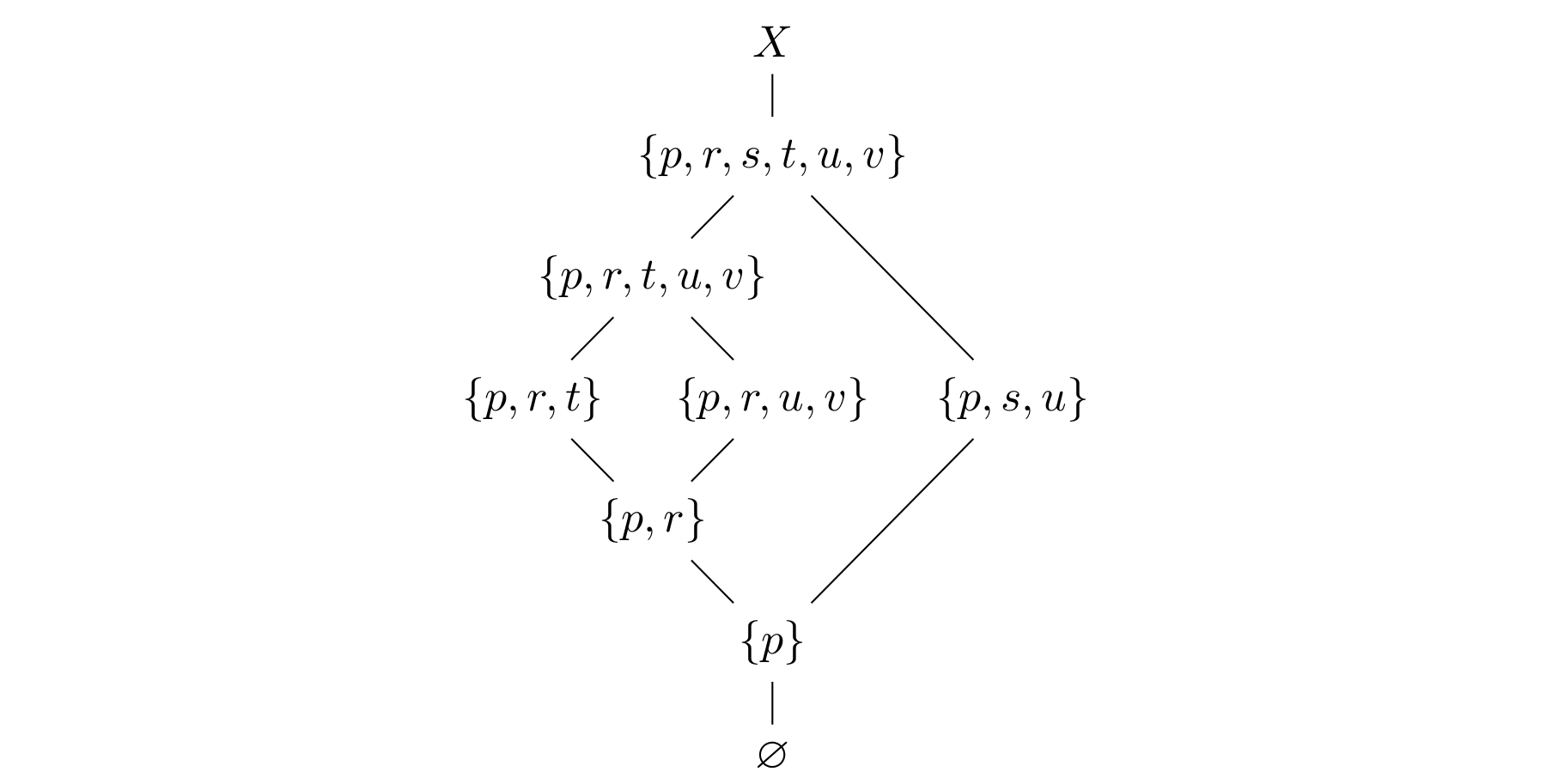

For our counterexample, consider the 5-element poset $P$ given by

![Rendered by QuickLaTeX.com \[\begin{array}{ccccc}p\!\!\! & & & & \!\!\!q \\ \uparrow\!\!\! & & & & \!\!\!\uparrow \\ r\!\!\! & & & & \!\!\!s \\ & \!\!\!\nwarrow\!\!\! & & \!\!\!\nearrow\!\!\! & \\ & & \!\!\!t,\!\!\!\!\! & & \end{array}\]](http://lovelylittlelemmas.rjprojects.net/wp-content/ql-cache/quicklatex.com-fd07751615877f22d5bd099f23612bc4_l3.svg)

and let $X = P \amalg \{u, v\}$ be the disjoint union of $P$ (with the Alexandroff topology on $P$, see the previous post) with a two-point indiscrete space $\{u, v\}$. Recall that the open sets in $P$ are the upwards closed ones and the closed sets the downward closed ones. Let $S = \{p, s, u\}$. Then the diagram of inclusions becomes

\[\begin{array}{ccccccccc}
\{p\} & \subsetneq & \{p,r\} & \subsetneq & \{p,r,u,v\} & \subsetneq & \{p,r,t,u,v\} & \subsetneq & \{p,r,s,t,u,v\}, \\
\{p\} & \subsetneq & \{p,r\} & \subsetneq & \{p,r,t\} & \subsetneq & \{p,r,t,u,v\} & \subsetneq & \{p,r,s,t,u,v\}, \\
\{p\} & \subsetneq & \{p,s,u\} & \subsetneq & \{p,r,s,t,u,v\}. & & & &
\end{array}\]

We see that all 7 noncomplementary sets defined by $S$ are pairwise distinct.
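A counterexample of this kind is easy to check by machine. The sketch below rests on several assumptions of mine: the arrows in the poset diagram are read as $t \le r \le p$ and $t \le s \le q$, the two indiscrete points are called `u` and `v`, and the test set is $S = \{p, s, u\}$. It encodes the space by the smallest open neighbourhood of each point, which is enough to compute interior and closure in a finite space.

```python
# Smallest open neighbourhood of each point. For the poset part this is the
# upper set of the point (assuming t <= r <= p and t <= s <= q); for the
# indiscrete part it is all of {u, v}.
UP = {
    "p": {"p"},
    "q": {"q"},
    "r": {"r", "p"},
    "s": {"s", "q"},
    "t": {"t", "r", "s", "p", "q"},
    "u": {"u", "v"},
    "v": {"u", "v"},
}
X = frozenset(UP)


def a(S):
    """Interior: points whose smallest neighbourhood lies inside S."""
    return frozenset(x for x in X if UP[x] <= S)


def b(S):
    """Closure: points whose smallest neighbourhood meets S."""
    return frozenset(x for x in X if UP[x] & S)


def c(S):
    """Complement in X."""
    return X - S


OPS = {"a": a, "b": b, "c": c}


def apply(word, S):
    """Apply a word to S; the rightmost letter acts first, so ab = a(b(S))."""
    for letter in reversed(word):
        S = OPS[letter](S)
    return S


NONCOMPLEMENTARY = ["", "a", "b", "ab", "ba", "aba", "bab"]
WORDS = NONCOMPLEMENTARY + ["c" + w for w in NONCOMPLEMENTARY]

S = frozenset({"p", "s", "u"})  # assumed choice of test set
RESULTS = {w: apply(w, S) for w in WORDS}

for w in WORDS:
    print(w or "1", sorted(RESULTS[w]))
print(len(set(RESULTS.values())), "distinct sets")
```

With these assumptions the 14 words give 14 different subsets of the 7-point space. Other choices of $S$ work as well, so this particular set should be read as one convenient witness.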

Remark. Steen and Seebach [SS, Example 32(9)] give a different example where all 14 differ, namely a suitable subset $S \subseteq \mathbf R$. That is probably a more familiar type of example than the one I gave above.

On the other hand, my example is minimal: the diagram of inclusions above shows that for all inclusions to be strict, the space $X$ needs at least 6 points. We claim that 6 is not possible either:

Lemma. Let $X$ be a finite $T_0$ topological space, and $S \subseteq X$ any subset. Then $aba(S) = ab(S)$ and $bab(S) = ba(S)$.

But in a 6-element counterexample $X$, the diagram of inclusions shows that any point occurs as the difference $A \setminus B$ for some $A, B \in \{\varnothing, a(S), aba(S), ab(S), ba(S), bab(S), b(S), X\}$. Since each of $A$ and $B$ is either open or closed, we see that the naive constructible topology on $X$ is discrete, so $X$ is $T_0$ by the remark from the previous post. So our lemma shows that $X$ cannot be a counterexample.

Proof of Lemma. We will show that $ab(S) \subseteq aba(S)$; the reverse inclusion was already shown, and the result for $bab(S)$ follows by replacing $S$ with its complement.

In the previous post, we saw that $X$ carries the Alexandroff topology on some finite poset $P$. If $T \subseteq P$ is any nonempty subset, it contains a maximal element $t$, and since $P$ is a poset this means $x \geq t \Rightarrow x = t$ for all $x \in T$. (In a preorder, you would only get $x \geq t \Rightarrow x \leq t$.)

The closure of a subset $S \subseteq P$ is the lower set $P_{\leq S} = \{x \in P \mid x \leq s \text{ for some } s \in S\}$, and the interior is the upper set $\{x \in P \mid P_{\geq x} \subseteq S\}$. So we need to show that if $x \in ab(S)$ and $y \geq x$, then $y \in ba(S)$.

By definition of $ab(S)$, we get $y \in b(S)$, i.e. there exists $z \in S$ with $y \leq z$. Choose a maximal $z$ with this property; then we claim that $z$ is a maximal element in $P$. Indeed, if $w \geq z$, then $w \geq x$ so $w \in b(S)$, meaning that there exists $s \in S$ with $w \leq s$. Then $s \geq z$ and $s \geq y$, which by definition of $z$ means $s = z$, and therefore $w = z$. Thus $z$ is maximal in $P$, hence $z \in a(S)$ since $z \in S$ and $P_{\geq z} = \{z\}$. From $y \leq z$ we conclude that $y \in ba(S)$, finishing the proof.

The lemma fails for non-$T_0$ spaces, as we saw in the example above. More succinctly, if $X = \{x, y\}$ is indiscrete and $S = \{x\}$, then $ab(S) = X$ and $aba(S) = \varnothing$. The problem is that $x$ is maximal, but $y \geq x$ and $y \notin S$.
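Both the lemma and its failure without $T_0$ can be checked by brute force on small examples. The sketch below is only a sanity check on two spaces, not a proof. It again describes a finite space by the smallest open neighbourhood of each point; the helper names `operators` and `lemma_holds` are hypothetical, and the poset is the 5-element one from above with the arrows read as $t \le r \le p$ and $t \le s \le q$ (an assumption on the orientation).

```python
from itertools import combinations


def operators(up):
    """Return (points, interior, closure) for a finite space given by the
    smallest open neighbourhood up[x] of each point x."""
    points = frozenset(up)

    def interior(S):
        return frozenset(x for x in points if up[x] <= S)

    def closure(S):
        return frozenset(x for x in points if up[x] & S)

    return points, interior, closure


def lemma_holds(up):
    """Check aba(S) = ab(S) and bab(S) = ba(S) for every subset S."""
    points, a, b = operators(up)
    ordered = sorted(points)
    for size in range(len(ordered) + 1):
        for combo in combinations(ordered, size):
            S = frozenset(combo)
            if a(b(a(S))) != a(b(S)) or b(a(b(S))) != b(a(S)):
                return False
    return True


# The 5-element poset with its Alexandroff topology (a finite T_0 space).
POSET = {
    "p": {"p"},
    "q": {"q"},
    "r": {"r", "p"},
    "s": {"s", "q"},
    "t": {"t", "r", "s", "p", "q"},
}

# The two-point indiscrete space, which is not T_0.
INDISCRETE = {"x": {"x", "y"}, "y": {"x", "y"}}

print("poset:", lemma_holds(POSET))
print("indiscrete:", lemma_holds(INDISCRETE))
```

The check passes for the poset and fails for the indiscrete space, where $S = \{x\}$ already gives $ab(S) = X$ but $aba(S) = \varnothing$.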

References.

[SS] L.A. Steen, J.A. Seebach, Counterexamples in Topology. Reprint of the second (1978) edition. Dover Publications, Inc., Mineola, NY, 1995.
